2020 Bitcoin Node Performance Tests

Testing full validation syncing performance of seven Bitcoin node implementations.

As I’ve noted many times in the past, backing your bitcoin wallet with a fully validating node gives you the strongest security and privacy model available to Bitcoin users. Two years ago I started running an annual comprehensive comparison of various implementations to see how well they performed full blockchain validation. Now it's time to see what has changed over the past year!

The computer I use as a baseline is high-end but uses off-the-shelf hardware. I bought this PC at the beginning of 2018. It cost about $2,000 at the time. I'm using a gigabit internet connection to ensure that it is not a bottleneck.

Just set up a maxed out @PugetSystems PC on PureOS 8.0.

— Jameson Lopp (@lopp) February 11, 2018

Core i7 8700 3.2GHz 6 core CPU

32 GB DDR4-2666

Samsung 960 EVO 1TB M.2 SSD

Synced Bitcoin Core 0.15.1 (w/maxed out dbcache) in 162 min w/peak speeds of 80 MB/s. Next step: see how much traffic I can serve on gigabit fiber. pic.twitter.com/QOvuwPgCgy

Note that no Bitcoin implementation strictly fully validates the entire chain history by default. As a performance improvement, most of them don’t validate signatures before a certain point in time. This is done under the assumption that it would be very difficult to get a fraudulent block hash through peer review when updating the code.

For the purposes of these tests I need to control as many variables as possible; some implementations may skip signature checking for a longer period of time in the blockchain than others. As such, the tests I'm running do not use the default settings - I change one setting to force the checking of all transaction signatures and I often tweak other settings in order to make use of the higher number of CPU cores and amount of RAM on my machine.
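To make the idea of skipping historical signature checks concrete, here is a deliberately simplified sketch. It is not the logic of any particular node: real implementations key off a hard-coded block hash and apply additional conditions, whereas this model uses block heights only, and the function and parameter names are hypothetical.

```python
from typing import Optional


def should_verify_signatures(block_height: int,
                             assumed_valid_height: Optional[int]) -> bool:
    """Decide whether signatures in a block get checked (simplified model).

    assumed_valid_height is a hypothetical stand-in for the block that a
    release treats as already reviewed. None models a setting such as
    assumevalid=0, which forces checking every signature from genesis.
    """
    if assumed_valid_height is None:
        return True
    # Blocks at or below the assumed-valid point skip signature checks.
    return block_height > assumed_valid_height


print(should_verify_signatures(500_000, 600_000))  # False: skipped by default
print(should_verify_signatures(500_000, None))     # True: forced full validation
```

The tests in this article correspond to the second call: every implementation is configured so that no historical block is exempt.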

The amount of data in the Bitcoin blockchain is relentlessly increasing with every block that is added, thus it's a never-ending struggle for node implementations to continue optimizing their code in order to prevent the initial sync time for a new node from becoming obscenely long. After all, if it becomes unreasonably expensive or time consuming to start running a new node, most people who are interested in doing so will choose not to, which weakens the overall robustness of the network.

Last year's test was for syncing to block 601,300 while this year's is syncing to block 655,000. This is a data increase of 25% from 246.5GB to 307.8GB. As such, we should expect implementations that have made no performance changes to take about 25% longer to sync.
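The 25% baseline can be reproduced with a couple of lines of arithmetic. This is a minimal sketch that assumes sync time scales linearly with blockchain size; the 600 minute figure is a made-up example, not a measurement.

```python
def growth_fraction(old_size_gb: float, new_size_gb: float) -> float:
    """Fractional growth in blockchain size between two test runs."""
    return (new_size_gb - old_size_gb) / old_size_gb


def expected_sync_minutes(last_year_minutes: float, growth: float) -> float:
    """Projected sync time if nothing changed except the amount of data.

    Assumes sync time is proportional to blockchain size.
    """
    return last_year_minutes * (1 + growth)


growth = growth_fraction(246.5, 307.8)
print(f"{growth:.1%}")  # 24.9%

# Hypothetical node that took 10 hours (600 minutes) last year.
print(round(expected_sync_minutes(600, growth)))  # 749
```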

What's the absolute best case syncing time we could expect? Since you have to perform over 1.6 billion ECDSA verification operations in order to reach block 655,000:

On my benchmark machine the most recent version of libsecp256k1 is able to perform an ECDSA verification in around 7000 nanoseconds. So about 3 hours.

— Jameson Lopp (@lopp) November 2, 2020
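The "about 3 hours" figure follows directly from the two numbers above. The sketch below just multiplies them out, assuming verifications run one after another and that nothing else (downloading, disk access, UTXO lookups) costs any time.

```python
SIGNATURE_CHECKS = 1.6e9   # ECDSA verifications needed to reach block 655,000
NS_PER_VERIFY = 7_000      # measured cost of one verification, in nanoseconds


def best_case_hours(checks: float, ns_per_check: float) -> float:
    """Lower bound on sync time if signature checks were the only work."""
    total_seconds = checks * ns_per_check / 1e9
    return total_seconds / 3600


print(round(best_case_hours(SIGNATURE_CHECKS, NS_PER_VERIFY), 2))  # 3.11
```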

On to the results!

Bcoin v2.1.2

Full validation sync of @Bcoin v2.1.2 to block 655,000 completed in 21 hours, 52 minutes on my benchmark machine. Still doesn't seem to be taking full advantage of all CPU cores; they hover around 50% usage.

— Jameson Lopp (@lopp) November 6, 2020

If you read last year's report you may recall that Bcoin won the "most improved" award for the variety of optimizations they implemented. It looks like this year brought forth some minor performance optimizations and as a result the sync time has increased by 18% rather than the expected 25%.

I suspect that the current bottleneck lies in the peer / network management as my CPU / RAM / disk I/O never maxed out from what I could tell. If memory serves, bcoin is rather limited in the RAM it can use due to Node.js heap size limits. CPU and disk I/O hovered around 50% usage the whole time. Perhaps some better "pending block" queue management would help feed more work to the node? Or there may be some other issue with the worker logic that limits it to the number of physical cores rather than making use of all virtual cores.

My bcoin.conf:

checkpoints:false

cache-size:10000

sigcache-size:1000000

max-files:5000

Just for fun to see if I could force bcoin to use all my virtual cores, I did a second sync test with "workers-size:12" but it ended up taking 27 hours, so this clearly only created more contention between the worker threads rather than resulting in work being processed faster.

Bitcoin Core 0.21

It took 341 minutes to sync Bitcoin Core 0.21 from genesis to block 655,000 with assumevalid=0 on my benchmark machine. 🚀Endomorphism FTW🚀

— Jameson Lopp (@lopp) November 6, 2020

Astute readers may notice that Bitcoin Core 0.21 hasn't been released yet; I built it from the master branch which is currently under a feature freeze for the upcoming 0.21 release. Testing Bitcoin Core was a breeze as usual; once again the bottleneck was definitely CPU. The big change with this release is the enabling of GLV Endomorphism in the secp256k1 library, for which I performed a separate round of benchmarks earlier.

I ran 4 different syncs of Bitcoin Core to benchmark the real-world performance improvements offered by enabling GLV Endomorphism. The results were better than expected!

— Jameson Lopp (@lopp) September 28, 2020

Default sync: 18% faster

Full verification of all historical signatures sync: 28% faster. pic.twitter.com/wJID8nZfNs

My bitcoind.conf:

assumevalid=0

dbcache=24000

maxmempool=1000

btcd v0.21.0-beta

Full validation sync of btcd v0.21.0-beta to block 655,000 took my benchmark machine 3 days, 11 hours, 31 minutes. CPU-bound the entire time; it made over 7TB of disk writes due to not caching UTXOs in RAM.

— Jameson Lopp (@lopp) November 6, 2020

While btcd made a ton of improvements when it came "back from the grave" last year, it looks like some other improvements have happened since then. It only took 11% longer to sync 25% more data, so they are still fighting back against the increasing resource requirements. I do believe there is a lot of room left for improvement, though - especially in terms of UTXO caching. Due to the amount of disk I/O, I expect sync time for btcd on a spinning disk would be far longer.

My btcd.conf:

nocheckpoints=1

sigcachemaxsize=1000000

Gocoin 1.9.8

Full validation sync of Gocoin 1.9.8 with secp256k1 library enabled took my benchmark machine 7 hours, 38 minutes to get to block 655,000. 🚀🚀🚀 CPU bottleneck has been fixed + endomorphism FTW!🚀🚀🚀

— Jameson Lopp (@lopp) November 6, 2020

I made the following changes to get maximum performance according to the documentation:

- Installed secp256k1 library and built gocoin with sipasec.go

- Disabled wallet functionality via AllBalances.AutoLoad:false

My gocoin.conf:

LastTrustedBlock: 00000000839a8e6886ab5951d76f411475428afc90947ee320161bbf18eb6048

AllBalances.AutoLoad:false

UTXOSave.SecondsToTake:0

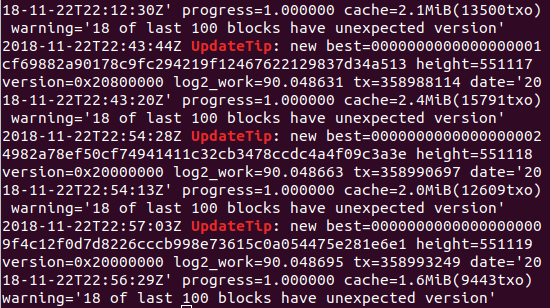

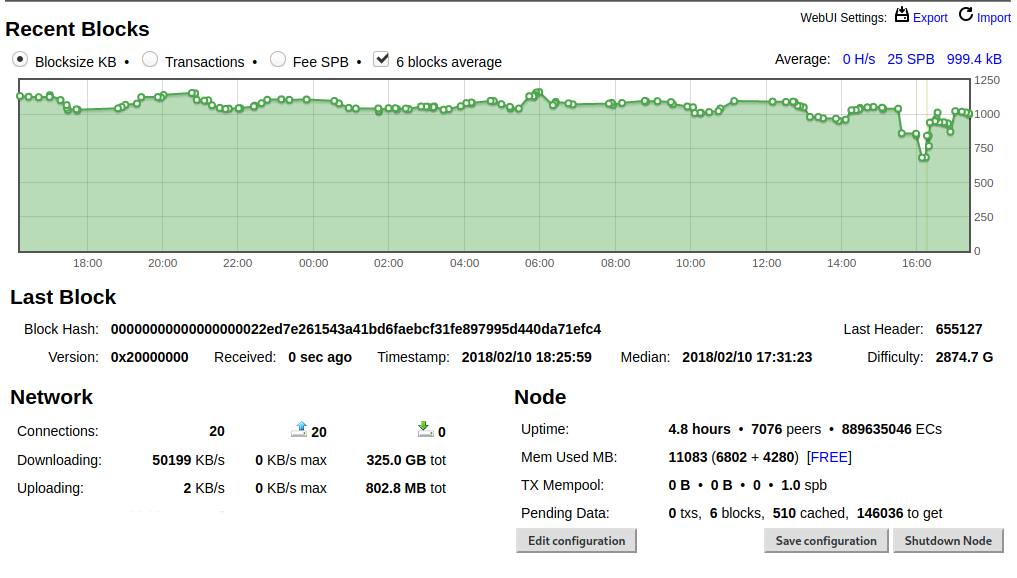

I noticed pretty quickly that the download bandwidth usage was insanely high; I saw speeds over 50 MB/s. It looks like Gocoin has probably made some major improvements to their peer management. I also noticed that it looks like Gocoin has fixed the CPU bottleneck I hit in previous tests; no longer were my CPU cores stuck below 50% usage but rather they hovered around 80%.

Gocoin still has the best dashboard of any node implementation.

After my first sync completed in 8 hours, 42 minutes (over twice the speed of last year's test), I remembered something: I hadn't recompiled the secp256k1 library and thus was using a version without the GLV Endomorphism optimization! It turns out Gocoin even has some ECDSA benchmark tests that you can run quite easily. I ran them several times and each ECDSA verification took ~11,000 nanoseconds. Then I updated and recompiled libsecp256k1 and the benchmark tests showed each verification taking ~7,000 nanoseconds. So I wiped the data directory and started a new sync...

The new sync completed in 7 hours and 38 minutes, 12% faster than with the old secp256k1 library. That falls short of the ~28% improvement Bitcoin Core saw, which I was hoping to match, but it's still a noteworthy improvement. My initial guess would be that it's due to Golang not having as many optimizations as C++.
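One way to read these Gocoin numbers is to compare the per-verification speedup with the overall sync speedup. The sketch below does that arithmetic and then makes a rough, Amdahl-style estimate of how much of the sync's wall-clock time was sensitive to signature verification speed. That last step is an assumption on my part: it treats everything else as unchanged between the two runs and ignores parallelism effects.

```python
def percent_reduction(before: float, after: float) -> float:
    """How much smaller 'after' is than 'before', as a percentage."""
    return (before - after) / before * 100


old_sync_minutes = 8 * 60 + 42   # sync with the old secp256k1 build
new_sync_minutes = 7 * 60 + 38   # sync with the recompiled library

sync_gain = percent_reduction(old_sync_minutes, new_sync_minutes)
verify_gain = percent_reduction(11_000, 7_000)  # ns per ECDSA verification

# Rough estimate: fraction of wall-clock time that scaled with verify cost.
implied_fraction = sync_gain / verify_gain

print(f"sync time reduced by {sync_gain:.1f}%")       # 12.3%
print(f"verify time reduced by {verify_gain:.1f}%")   # 36.4%
print(f"implied verification share: {implied_fraction:.0%}")  # 34%
```

Under those assumptions only about a third of the sync time was bound by signature verification, which would explain why a large drop in per-verification cost shows up as a much smaller drop in total sync time.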

Libbitcoin Node 3.6.0

Full validation sync of Libbitcoin Node 3.6.0 to block 655,000 took my machine 36 hours, 48 minutes with a 100,000 UTXO cache.

— Jameson Lopp (@lopp) November 11, 2020

Libbitcoin Node wasn’t too bad; the hardest part was removing the hardcoded block checkpoints. In order to do so I had to clone the git repository, check out the “version3” branch, delete all of the checkpoints other than genesis from bn.cfg, and run the install.sh script to compile the node and its dependencies.

My libbitcoin config:

[network]

threads = 12

outbound_connections = 20

[blockchain]

# I set this to the number of virtual cores since default is physical cores

cores = 12

[database]

cache_capacity = 100000

[node]

block_latency_seconds = 5

After running for 30 hours I noticed something was amiss. The node was only at block 555,000 while I expected it to be over 600,000 based on last year's test. The particularly weird thing was that the node had downloaded over 2.7TB of data while the entire blockchain is only around 308GB. My conclusion was that by bumping up the network threads and/or outbound connections I had run into an issue of poor networking resource management. So I wiped the node and started a second run with the exact same config as last year.

[blockchain]

# I set this to the number of virtual cores since default is physical cores

cores = 12

[database]

cache_capacity = 100000

[node]

block_latency_seconds = 5

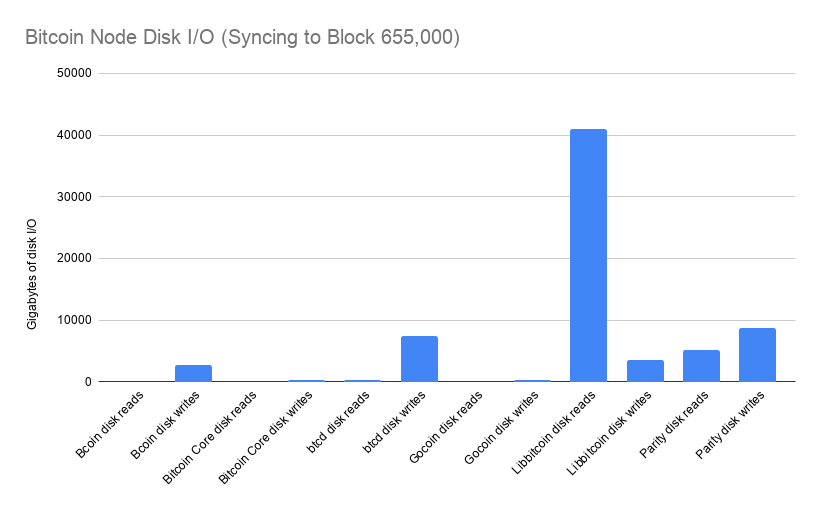

I noted that during the course of syncing, Libbitcoin Node wrote 3.5TB to disk and read over 41TB from it. Total writes look reasonable but the total reads are much higher than with other implementations. There are probably more caching improvements that could be made; it was only using 6GB of RAM by the end of the sync. While it was using all the CPU cores, they were only hovering around 30%. I suspect the bottleneck is disk I/O, as I was seeing disk reads hovering in the 150 MB/s to 220 MB/s range.
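To put those disk totals in perspective, they can be expressed as a multiple of the blockchain size. This is a small sketch that assumes decimal units (1 TB = 1000 GB) and uses the 307.8GB chain size quoted earlier; since the read total was "over 41TB", the second figure is a lower bound.

```python
CHAIN_SIZE_GB = 307.8  # blockchain size at block 655,000


def amplification(total_io_tb: float, chain_gb: float = CHAIN_SIZE_GB) -> float:
    """Total disk I/O expressed as a multiple of the blockchain size."""
    return total_io_tb * 1000 / chain_gb


print(round(amplification(3.5), 1))  # 11.4  (writes)
print(round(amplification(41), 1))   # 133.2 (reads)
```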

It's worth noting that Libbitcoin Node can't benefit from the endomorphism optimization that some other implementations have enabled this year... because it enabled endomorphism years ago!

A particularly odd issue I've never seen before was that on a regular basis (several times an hour) my entire home network would grind to a halt while syncing libbitcoin. Every time I noticed this happening I'd check my ASUS RT-AC88U router's dashboard and saw that the primary CPU was pegged at 100% even though none of the devices on my network were using substantial bandwidth. It's not clear to me what network operations libbitcoin could be performing that are so CPU intensive for the router.

Parity Bitcoin master (commit 7fb158d)

Full validation sync of Parity Bitcoin (commit f635966) to block 655,000 on my benchmark machine took 2 days, 11 hours, 22 minutes.

— Jameson Lopp (@lopp) November 11, 2020

Parity is an interesting implementation that seems to have the bandwidth management figured out well but is still lacking on the CPU and disk I/O side. CPU usage stays below 50%, possibly due to lack of hyperthreading support, but it was using a lot of the cache I made available: 23GB out of the 24GB I allocated! The real bottleneck appears to be disk I/O. For some reason it was constantly churning 100 MB/s in disk reads and writes even though it was only adding about 2 MB/s worth of data to the blockchain. It's weird that this much disk activity is being performed despite the 23GB of cache in use.

There are probably some inefficiencies in Parity’s internal database (RocksDB) that are creating this bottleneck. I’ve had issues with RocksDB in the past while running Parity’s Ethereum node and while running Ripple nodes. RocksDB was so bad that Ripple actually wrote their own DB called NuDB.

My pbtc config:

--btc

--db-cache 24000

--verification-edge 00000000839a8e6886ab5951d76f411475428afc90947ee320161bbf18eb6048

Stratis 3.0.6

Syncing the @stratisplatform Bitcoin node has been pretty disappointing. After 4 days, 3 hours it only reached block 428,002 and then crashed due to running out of disk space. Turns out the node doesn't delete UTXOs from disk once they're spent. 77GB of blocks and 632GB of UTXOs!

— Jameson Lopp (@lopp) November 2, 2019

Last year's Stratis sync was a failure. Technically they have not made a release since then, though there is a 3.0.6 pre-release from December 2019 that I decided to try, mainly because it promised a 13X wallet sync speedup. It turns out that this does not apply to node syncing.

My Stratis bitcoin.conf:

assumevalid=0

checkpoints=0

maxblkmem=1000

maxcoinviewcacheitems=1000000

CPU was by far the bottleneck: once it got to block 300,000 the processing slowed down to just a couple of blocks per second even though the queue of blocks to be processed was full and there was little disk activity. There were many times when only 1 of my 12 cores was being used, so it looks like there is plenty of room for parallelization improvement.

Unfortunately, a critical unresolved issue is that their UTXO database doesn't seem to actually delete UTXOs, so you'll need several terabytes of disk space, whereas most Bitcoin implementations today use under 400GB. As such, I was unable to complete the sync because my machine only has a 1 TB drive.

Disk Resource Usage

Reading and writing data to disk is one of the slowest operations you can ask a computer to perform. As such, node implementations that keep more data in fast-access RAM will likely outperform those that have to interact with disks. In this regard Libbitcoin is an odd beast because we know that it has a UTXO cache and it's still middle of the pack in terms of total syncing performance despite the absurd amount of disk reads it performs. I can only imagine how blazing fast it would be if those dropped down to a level under 1 GB similar to Bitcoin Core/Gocoin. It's worth noting that some implementations like Bcoin may be underreported due to spawning child processes whose resource usage doesn't get aggregated up to the master process.

Performance Rankings

- Bitcoin Core 0.21: 5 hours, 41 minutes

- Gocoin 1.9.8: 7 hours, 38 minutes

- Bcoin 2.1.2: 21 hours, 52 minutes

- Libbitcoin Node 3.6.0: 1 day, 12 hours, 48 minutes

- Parity Bitcoin 7fb158d: 2 days, 11 hours, 22 minutes

- BTCD v0.21.0-beta: 3 days, 11 hours, 31 minutes

- Stratis 3.0.6: Did not complete; definitely over a week

The only upset this year was in Gocoin's favor as it has zoomed ahead and snatched second place from Bcoin.

Delta vs Last Year's Tests

We can see that most implementations are taking longer to sync, which is to be expected given the append-only nature of blockchains. Remember that the total size of the blockchain has grown by 25% since my last round of tests, thus we would expect that an implementation with no new performance improvements or bottlenecks should take ~25% longer to sync.

- Gocoin 1.9.8: -12 hours, 18 minutes (62% shorter)

- Bitcoin Core 0.21: -58 minutes (15% shorter)

- Bcoin 2.1.2: +3 hours, 23 minutes (18% longer)

- BTCD v0.21.0-beta: +8 hours, 19 minutes (11% longer)

- Parity Bitcoin 7fb158d: +9 hours, 12 minutes (18% longer)

- Libbitcoin Node 3.6.0: +9 hours, 11 minutes (33% longer)

As we can see, it looks like every implementation has made at least some performance improvements with the exception of Libbitcoin and Stratis, both of which show very little development over the past year judging by their GitHub activity.
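The percentages in the list above can be re-derived from this year's sync times and the stated deltas. The sketch below does so and flags which implementations grew slower by less than the ~25% data growth. Last year's times are inferred here as this year's time minus the delta rather than taken from a separate data source, and the helper names are my own.

```python
def minutes(days: int = 0, hours: int = 0, mins: int = 0) -> int:
    """Convert a days/hours/minutes duration to total minutes."""
    return (days * 24 + hours) * 60 + mins


# name -> (this year's sync time, change vs last year), both in minutes
RESULTS = {
    "Gocoin": (minutes(hours=7, mins=38), -minutes(hours=12, mins=18)),
    "Bitcoin Core": (minutes(hours=5, mins=41), -58),
    "Bcoin": (minutes(hours=21, mins=52), minutes(hours=3, mins=23)),
    "BTCD": (minutes(days=3, hours=11, mins=31), minutes(hours=8, mins=19)),
    "Parity Bitcoin": (minutes(days=2, hours=11, mins=22), minutes(hours=9, mins=12)),
    "Libbitcoin Node": (minutes(days=1, hours=12, mins=48), minutes(hours=9, mins=11)),
}

DATA_GROWTH_PERCENT = 25  # approximate blockchain growth since last year


def percent_change(this_year: int, delta: int) -> float:
    """Percentage change relative to last year's (inferred) sync time."""
    last_year = this_year - delta
    return delta / last_year * 100


for name, (this_year, delta) in RESULTS.items():
    pct = percent_change(this_year, delta)
    verdict = "beat data growth" if pct < DATA_GROWTH_PERCENT else "fell behind"
    print(f"{name}: {pct:+.0f}% ({verdict})")
```

With these inputs only Libbitcoin Node lands above the 25% line.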

Exact Comparisons Are Difficult

While I ran each implementation on the same hardware to keep those variables static, there are other factors that come into play.

- There’s no guarantee that my ISP was performing exactly the same throughout the duration of all the syncs.

- Some implementations may have connected to peers with more upstream bandwidth than other implementations. This could be random or it could be due to some implementations having better network management logic.

- Not all implementations have caching; even when configurable cache options are available it’s not always the same type of caching.

- Not all nodes perform the same indexing functions. For example, Libbitcoin Node always indexes all transactions by hash — it’s inherent to the database structure. Thus this full node sync is more properly comparable to Bitcoin Core with the transaction indexing option enabled.

- Your mileage may vary due to any number of other variables such as operating system and file system performance.

Conclusion

Given that the strongest security model a user can obtain in a public permissionless crypto asset network is to fully validate the entire history themselves, I think it’s important that we keep track of the resources required to do so.

We know that due to the nature of blockchains, the amount of data that needs to be validated for a new node that is syncing from scratch will relentlessly continue to increase over time. Thus far the tests I run are on the same hardware each year, but on the bright side we do know that hardware performance per dollar will also continue to increase each year.

It's important that we ensure the resource requirements for syncing a node do not outpace the hardware performance that is available at a reasonable cost. If they do, then larger and larger swaths of the populace will be priced out of self sovereignty in these systems.